Abstract

Improving generalization is one key challenge in Embodied AI, where obtaining large-scale datasets across diverse scenarios is costly. Traditional weak augmentations, such as cropping and flipping, are insufficient for improving a model’s performance in new environments. Existing data augmentation methods often disrupt task-relevant information in images, potentially degrading performance. To overcome these challenges, we introduce EAGLE—an Efficient trAining framework for GeneraLizablE visuomotor policies—that improves upon existing methods by: 1) enhancing generalization by applying augmentation only to control-related regions identified through a self-supervised control-aware mask; and 2) improving training stability and efficiency by distilling knowledge from an expert to a visuomotor student policy, which is then deployed to unseen environments without further fine-tuning. Comprehensive experiments on three domains—including the DMControl Generalization Benchmark (DMC-GB), the enhanced Robot Manipulation Distraction Benchmark (RMDB), and a long-sequential drawer-opening task—validate the effectiveness of our method.

Method

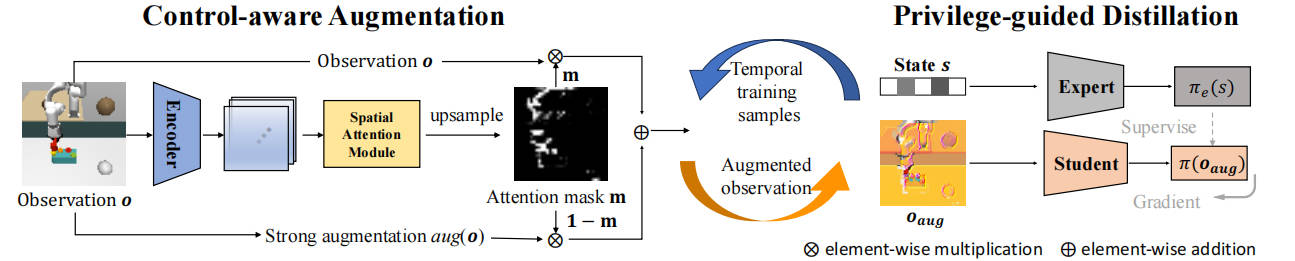

We introduce EAGLE, an efficient training framework for generalizable visuomotor policies. The overall goal of EAGLE is to learn visuomotor policies that are invariant to visual changes and capable of zero-shot generalization. EAGLE consists of two simultaneously optimized modules: a control-aware augmentation module and a privilege-guided distillation module. The former retrieves temporal data from the replay buffer and performs a self-supervised reconstruction task, accompanied by three auxiliary losses, to identify control-related pixels. The latter augments the observation input and distills knowledge from a pretrained DRL expert (which processes only environment states) into the visuomotor student network (which processes only image observations). After training is completed, the visuomotor policy can be reliably deployed in complex environments with visual variations, without fine-tuning or additional supervision.
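The interaction between the two modules can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the 1x1 random channel mixing used as a stand-in for a strong augmentation, and the mean-squared-error distillation loss are all assumptions made for illustration. The mask convention (1 marks a pixel to preserve, 0 a pixel to augment) is also an assumption, chosen to match the description in the DMC-GB experiments.

```python
import numpy as np


def random_color_mix(obs, rng):
    """Hypothetical stand-in for a strong augmentation: random 1x1 channel mixing."""
    channels = obs.shape[-1]
    weights = rng.normal(size=(channels, channels))
    mixed = obs @ weights  # (H, W, C) @ (C, C) -> (H, W, C)
    # Rescale to [0, 1] so the result is still a valid image.
    lo, hi = mixed.min(), mixed.max()
    return (mixed - lo) / (hi - lo + 1e-8)


def control_aware_augment(obs, mask, rng):
    """Keep pixels where mask is 1 and replace the rest with augmented pixels."""
    augmented = random_color_mix(obs, rng)
    mask = mask[..., None]  # broadcast the (H, W) mask over the channel axis
    return mask * obs + (1.0 - mask) * augmented


def distillation_loss(student_actions, expert_actions):
    """Assumed loss: mean squared error between student and expert actions."""
    diff = np.asarray(student_actions) - np.asarray(expert_actions)
    return float(np.mean(diff ** 2))


rng = np.random.default_rng(0)
obs = rng.uniform(size=(8, 8, 3))  # toy image observation in [0, 1]
mask = np.zeros((8, 8))
mask[2:6, 2:6] = 1.0               # toy "control-related" region
aug_obs = control_aware_augment(obs, mask, rng)
```

In this sketch the student would be trained on `aug_obs` while the expert's action, computed from the environment state, serves as the regression target. In EAGLE the mask is learned through the self-supervised reconstruction task rather than set by hand as it is here.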

Experiments

Experiments on DMC-GB

As shown in Tab. 1, EAGLE achieves an average return of 761 in the Hard settings, which is 17.4% higher than the previous state-of-the-art method, SGQN. EAGLE overcomes the limitations imposed by visual distractions by using control-aware masks that preserve task-critical regions while augmenting all irrelevant areas.
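As a quick sanity check on these figures, the baseline return implied by the stated improvement can be worked out directly. The sketch below assumes that "17.4% higher" is a relative improvement over SGQN's average return; the resulting value (roughly 648) is derived from the two numbers in the text and is not read from Tab. 1.

```python
eagle_return = 761.0   # average return in Hard settings, as stated in the text
relative_gain = 0.174  # "17.4% higher", assumed to be a relative improvement

# If eagle_return = baseline * (1 + relative_gain), solve for the baseline.
implied_baseline = eagle_return / (1.0 + relative_gain)
absolute_gain = eagle_return - implied_baseline

print(f"implied SGQN return: {implied_baseline:.1f}")
print(f"implied absolute gain: {absolute_gain:.1f}")
```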

Real-world Experiments

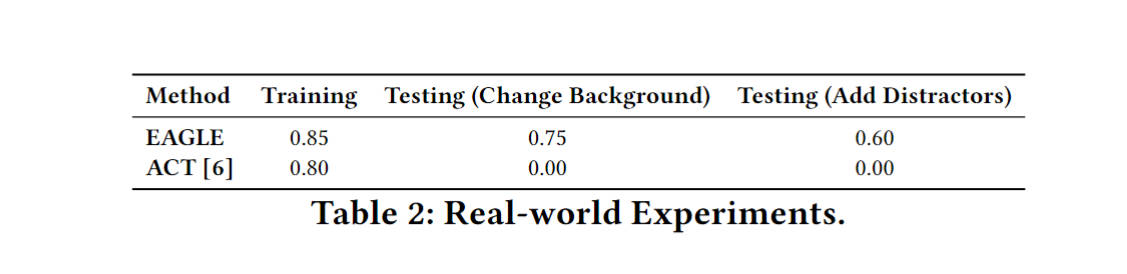

To validate EAGLE in real-world scenarios, we select a typical robot manipulation task: picking up a piece of bread and placing it on a plate. We use ACT with ResNet50 as the backbone and combine it with our control-aware augmentation module and the Random Conv technique. We compare the generalization performance of EAGLE and ACT by averaging results over 20 trials with varying bread positions. As shown in Tab. 2, EAGLE significantly improves generalization when faced with changing backgrounds and added distractors, whereas the baseline ACT lacks such generalization without proper data augmentation.

Figure panels (image 2): Training; Testing (Change Background); Testing (Add Distractors).

Conclusion

In this paper, we address the generalization challenge of visuomotor policies in the face of visual changes. We propose an Efficient trAining framework for GeneraLizablE visuomotor policies (EAGLE) designed to identify control-related regions and facilitate zero-shot generalization to unseen environments. EAGLE comprises two jointly optimized modules: a control-aware augmentation module and a privilege-guided policy distillation module. The former leverages a self-supervised reconstruction task with three auxiliary losses to learn a control-aware attention mask, which distinguishes task-irrelevant pixels and applies strong augmentations to reduce the generalization gap. The latter distills knowledge from a pretrained privileged expert into the visuomotor policy. We conduct extensive comparative and ablation studies across three challenging benchmarks, and the experimental results validate the effectiveness of our approach.